Mapping the messy middle

Reality isn't as clear-cut as the pundits make it out to be

The backwards world we're building

Last week I watched a VP of Sales spend forty minutes copying data between spreadsheets while, three tabs over, ChatGPT had just written her entire board presentation. She'll spend another hour this week checking if the AI got the numbers right, then an hour after that carefully copy-pasting each set of bullets into the right text box on PowerPoint and resizing them to fit.

This is the irony builders don’t really like talking about: we're using humanity's most advanced pattern-matching technology to write poetry while the humans who built careers on creativity and strategy are... playing clipboard. Feel the AGI.

Earlier in the centaurprise launch post, I argued we're missing the real opportunity by debating AI versus humans when the real wins come from AI with humans. Today I want to go deeper, because even some of the companies building the best human-AI interfaces are getting the allocation exactly wrong.

The spectrum that's blinding us

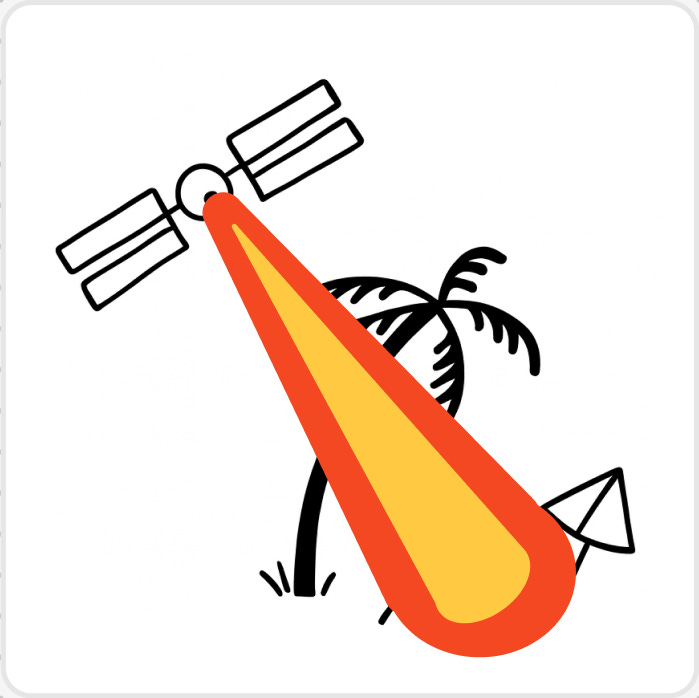

Here's how everyone seems to think about AI right now – as a single spectrum with two endpoints[1].

On one end, you've got the AGI believers. AI becomes superintelligent, solves everything, ends labor as we know it. We all sip mai tais on the beach while the machines handle it all. The mechanics? Hand-wavy. The transition? Fiat. The politics? Boring. The funding? Magic. The physical infrastructure? It'll maintain itself, apparently; it’s historically been so good at that.

Some folks on this end think the superintelligence loves us (ergo tiki drinks). Others think it destroys us (ergo death rays). But it’s two variations of the same premise: AI will do everything, humans will do nothing (just that one version of “nothing” is spicier than the other).

On the other end, you've got the bubble hypothesis. These folks hold that the whole AI thing has been worthless, propped up by VC subsidies and hype. When the money runs out, we'll realize ChatGPT was just autocomplete with good marketing, and we'll go back to whatever we were doing in October 2022.

This seems... unlikely at this point. I can run Ollama with an open-source model on my laptop right now, hooked into Zed, as my own open-source copilot. For free. Forever. Even if every AI company imploded tomorrow, that capability isn't going away. Some genies don't go back in bottles.

But here's the thing: this whole spectrum is wrong. It's not about how much AI we use. It's about what we use it for.

Opening the second dimension

Instead of a line, think of it as a map.

The vertical axis is optimization – are we using AI and humans for what they're each genuinely good at? The horizontal axis is the first spectrum from before – how much are we actually using AI versus humans?

On the top, you've got a strengths-based future. Humans handle trust, creativity, and judgment. AI handles pattern matching, parallel processing, and repetitive precision. Opportunity cost is minimized; adaptability and flexibility are maximized. It works like the centaur chess teams I wrote about, where humans provided intuition while computers provided calculation: both sides adapt to play to their strengths relative to each other and, when they do, the combined team is way stronger than its homogeneous rivals.

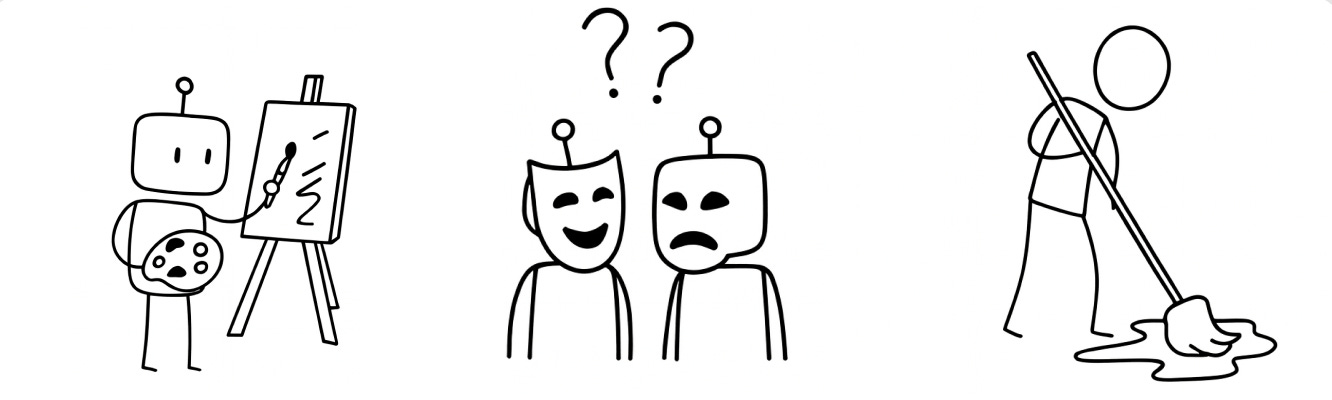

On the bottom edge – where I think we are right now – you've got role reversal. AI drafts beautiful images and sketches strategic documents while humans do the digital dishwashing. AI writes the poetry and architects the systems while humans copy-paste between AI windows that don’t talk to each other. We've given our most interesting problems to pattern matchers while using human intelligence as middleware.

It's absurd when you spell it out. But look at your own day. How much of it is spent cleaning up after AI versus doing the strategic thinking only you can do?

It’s not like we’ve satisfied demand

That's what drives me crazy about all the "AI will take all the jobs" talk. It assumes we've run out of problems worth solving.

Think about October 2022 before the ChatGPT launch. Were we living in a solved world? Had we fed everyone? Connected everyone to the internet? Given everyone education? Handled climate change? Cured the diseases that plague us? Or even more mundane luxuries like: built enterprise software that didn't make people want to throw their laptops out windows?

Of course not. There was, and is, infinitely more demand. The world needs so much work done on it. Work that isn’t getting done because humans are blocked doing other things. Work that requires capabilities we don't have. Work that needs both human judgment and superhuman reflexes or processing power or attention or endurance.

The constraint isn't demand for solutions. It’s capacity to deliver them.

Replacing humans with AI is zero-sum thinking. It assumes the pie is fixed, that the only question is who gets which slice. But that's never been how our economy works: we’ve always had a system that relies on and rewards growth. When we augment human capability – when we give people tools that amplify their strengths instead of replacing them – we expand what's possible.

A sales rep who doesn't have to spend 72% of their week on busywork doesn't become unemployed. They become capable of managing four times as many relationships, going four times deeper with each customer, solving problems they never had time to even see before. They close deals in the long tail of their pipeline that they ignore today. They grow the business.
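For anyone who wants to check the arithmetic, here is a back-of-the-envelope sketch in Python. It rests on loud assumptions: `capacity_multiplier` and `automated_fraction` are hypothetical names made up for this illustration, and the model treats every hour of busywork handed off as an hour of core work gained, which is an upper bound rather than a measurement.

```python
def capacity_multiplier(busywork_share: float, automated_fraction: float = 1.0) -> float:
    """How many times more core work fits into the same week.

    busywork_share: fraction of the week spent on busywork (0.72 in the text).
    automated_fraction: hypothetical share of that busywork handed to AI.
    Assumes freed hours convert one-for-one into core work.
    """
    if not 0 <= busywork_share < 1:
        raise ValueError("busywork_share must be in [0, 1)")
    if not 0 <= automated_fraction <= 1:
        raise ValueError("automated_fraction must be in [0, 1]")
    core_before = 1 - busywork_share
    core_after = core_before + busywork_share * automated_fraction
    return core_after / core_before


print(round(capacity_multiplier(0.72), 2))       # all busywork handed off → 3.57
print(round(capacity_multiplier(0.72, 0.5), 2))  # half of it handed off → 2.29
```

Under those assumptions, clearing the full 72% gives about 3.6 times the time for core work, which is the ballpark behind "four times." Clearing only half of it still more than doubles it.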

What optimization actually looks like

I've been building this future at gigue, watching early adopters reorganize their work around strength-based allocation. It's not theoretical anymore. I'm seeing it happen.

The sales team that uses AI to chase down follow-ups and next steps while humans focus on building relationships and understanding problems: they're not doing less work, they're doing different work. Deeper work. The kind that actually moves deals forward.

The engineering team that uses AI to generate test cases and scan for vulnerabilities while humans create features and design system architecture: they're not becoming obsolete, and they’re avoiding the skill rot you catch when you give yourself over to “the vibes.”

This isn't about efficiency. It's about capability. When you stop using humans as routers and start using them as thinkers, everything changes.

Dragging ourselves upward

The path from where we are (role reversal) to where we should be (optimization) isn't automatic. It requires deliberately rethinking every workflow, every job description, every assumption about who does what.

The centaur chess teams found this out. A 2022 study found what players already knew: amateurs with AIs were beating grandmasters with AIs, because the skillsets were different[2]. The study also found something no one expected: the best solo AI engines were not the ones that dominated the centaur matchups. In fact, for both sides, being the best by yourself was negatively associated with centaur performance[3]. Both humans and AI had to adapt to each other and reallocate tasks cleverly to win together[4].

Most companies won't do this. They'll keep having AI write strategies while humans copy-paste, because that’s easy. It’s imagination bias, just a one-for-one swap. They'll keep having models attempt creativity while knowledge workers handle digital busywork. They'll keep operating in that bizarre bottom quadrant because it's easier than questioning the fundamental architecture of work and opening the can of worms that comes with it.

But some companies will figure this out. They'll realize that the question isn't "how do we use AI to do human jobs?" but "how do we use AI to do the jobs humans never should have been doing in the first place?"

They'll map every task to its optimal processor. Find the tasks they didn’t know were there but have been tripping them up all along. Pattern matching to AI. Relationship building to humans. Parallel processing to AI. Creative leaps to humans. Repetitive precision to AI. Contextual judgment to humans.

And slowly, iteratively, they'll drag themselves up into that top quadrant where both humans and AI are doing what they're actually good at.
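The mapping exercise above can be sketched in a few lines of Python. This is a toy illustration, not a description of any real product: the `Task` class, the `misallocated` and `fit_score` helpers, and the sample week are all hypothetical. Only the six strength-to-processor pairs come from the text.

```python
from dataclasses import dataclass

# Strength-to-processor pairs taken from the list above.
BEST_PROCESSOR = {
    "pattern matching": "ai",
    "parallel processing": "ai",
    "repetitive precision": "ai",
    "relationship building": "human",
    "creative leaps": "human",
    "contextual judgment": "human",
}


@dataclass
class Task:
    name: str      # hypothetical task label
    strength: str  # the strength the task mostly needs (a key of BEST_PROCESSOR)
    done_by: str   # who handles it today: "ai" or "human"


def misallocated(tasks):
    """Return the tasks currently handled by the less suited processor."""
    return [t for t in tasks if BEST_PROCESSOR[t.strength] != t.done_by]


def fit_score(tasks):
    """Fraction of tasks already sitting with the better suited processor."""
    if not tasks:
        return 1.0
    return 1 - len(misallocated(tasks)) / len(tasks)


# A made-up week in the role-reversal zone.
week = [
    Task("draft strategy memo", "contextual judgment", "ai"),
    Task("copy numbers between sheets", "repetitive precision", "human"),
    Task("customer check-in call", "relationship building", "human"),
    Task("scan logs for anomalies", "pattern matching", "ai"),
]

for task in misallocated(week):
    print(f"move '{task.name}' to {BEST_PROCESSOR[task.strength]}")
print(fit_score(week))  # 0.5
```

Real tasks rarely need just one strength, so the single `strength` label is a simplification. The useful part is the habit: list the tasks, tag who does them today, and see how far the score is from 1.0.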

The mission in the messy middle

Scott Belsky had this phrase "the messy middle" for that grinding, oscillating period between initial excitement and eventual success. That's where we are with AI right now. The demos were exciting. The AGI dreams are far away[5]. We're in the messy middle, trying to make this stuff actually work.

My bet is that the winners will be the ones who stop debating replacement and start optimizing allocation. Who stop having philosophical debates about AGI and start building systems where humans and AI each play to their strengths.

’Cause here's the thing: we don't need AGI to transform work. We just need to stop using vanilla AI backwards. Stop having it write poetry while we copy-paste. Stop having it attempt strategy while we babysit its outputs. Stop pretending it's human while using humans as machines.

The future isn't AI doing everything or AI doing nothing. It's AI and humans each doing what they're built for. That's not a compromise: it's optimization.

And it's time we started building toward it.

[1] So I feel like I owe Randall Munroe a citation here because, when I uploaded my crappy stick figure sketches into ChatGPT and said “clean this up,” I decidedly got proto-XKCD as an output. Not really my intent but I’m sure those comics are in the DALL-E pre-training dataset so… go read xkcd.com if you haven’t already? I certainly have been for the past two decades.

[2] “These results indicate that the new human–machine capabilities are unrelated, or even negatively related, to humans' traditional chess playing capabilities–the capabilities that machines substitute … Paradoxically, centaur chess players must not rely on their chess playing capabilities, which are inferior to those of the machine, but use other capabilities that allow them to complement the machine's capabilities. Leading players are therefore often not highly rated chess players, but computer engineers with modest chess capability, who approach the game from a computational point of view (Cassidy, 2014). Similarly, the ability to select and tune chess engines in both centaur and machine chess is associated with general data science and creative capabilities rather than with specific chess playing capabilities (Cowen, 2013, p. 86). To develop new complementary resource bundles, humans must have a flexible ability to go beyond domain-specific expertise when structuring, bundling, and leveraging resources (Sirmon et al., 2007)... As our findings show, it is therefore unlikely that actors who outperform in terms of traditional domain-specific capabilities are also able to do so in changed contexts where new and unrelated capabilities determine competitive advantage.” [emphasis mine] https://sms.onlinelibrary.wiley.com/doi/10.1002/smj.3387

[3] “The results of the Bayes factors (BF), the relevant Bayesian Information Criteria (BIC), and the R² values suggest strong (BF > 20) to very strong (BF > 150) evidence of the absence of non-trivial effects … In other words, human and machine chess playing capabilities have no material impact on chess performance in centaur and engine tournaments.” [emphasis mine] https://sms.onlinelibrary.wiley.com/doi/10.1002/smj.3387

[4] I mentioned this one before: when you create “ensemble models” of humans and AIs together and run evals, the “centaur evals” wind up testing different behaviors than if you test each in isolation. (Sounds intuitive when you say it, but I see almost no one building these eval sets in practice.) Source: “Position: AI Should Not Be An Imitation Game: Centaur Evaluations,” Stanford Digital Economy Lab.

[5] A huge part of the problem with “AGI” is that it has no technical definition (just “human-like capability or better”), so the goalposts can move on a whim. AGI could mean exceeding any standard of intelligence we’ve ever known; it could also just mean anything better than an employee on a PIP. It’s hard to say where we are on the trajectory when there’s no clear destination.